Every time we are asked "What is Coseer?" we respond that we make software that automates and scales cognitive tasks in enterprise workflows. In most situations, the conversation then moves on to client-specific needs. But someone recently asked what Coseer really is.

Coseer is a set of advanced cognitive tools that, in different permutations, can take on very challenging cognitive problems in client environments. We ingest, aggregate and work with unstructured information to complete meaningful tasks that would have been impossible or very resource (read: human) intensive in the absence of technology like ours.

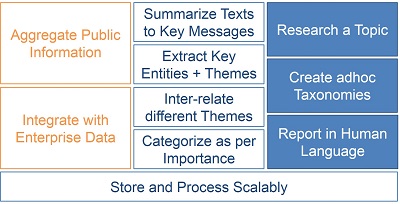

The most basic of these tools are ingesters of enterprise information repositories and aggregators of public information, including the Internet and social media. While such ingesters and aggregators are considered trivial today, we have put a lot of thought into these tools to make the downstream systems more efficient. In most situations Coseer's objective is to ingest huge amounts of information and select the specific pieces that solve the given problem. The triage begins with the ingesters.

The ingested data is then available to multiple cognitive tools. One of the prominent ones is a summarizer that extracts key messages from this data. Our summarizers differ from others in their focus on context, and in the fact that they process corpora together to get more accurate summaries. Other Coseer tools extract key entities from the text; unlike common NER tools, we don't use dictionaries. Yet others interrelate different themes or categorize pieces of information by their importance to our end users.

Based on these tools, we have developed advanced modules to tackle real-world challenges. For example, the taxonomy module first extracts key themes, interrelates them, and joins the interrelation data with the importance of messages to create the most relevant trees. The research module then summarizes all available information according to such taxonomies to create structured pages. The human language tool uses this research to put a narrative around structured information embedded in the unstructured data. And so forth...

For every client situation we plumb these tools together in a different configuration. For example, Coseer Express pipes information from the research module back to the categorizer and adds another filter based on importance before reporting to the user. Or, we are developing a sales intelligence tool that takes public and private information and runs all of it through the research module, but does not filter based on time the way Coseer Express does.
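To make the idea of plumbing tools together concrete, here is a minimal sketch in Python. It is not Coseer's actual code: the `compose` helper, the toy `research` and `categorize` stages, the filter thresholds, and the document fields (`text`, `age_days`) are all hypothetical stand-ins chosen for illustration.

```python
from typing import Callable, List

# A stage takes a list of documents and returns a (possibly smaller) list.
Stage = Callable[[List[dict]], List[dict]]


def compose(*stages: Stage) -> Stage:
    """Chain stages left to right into a single pipeline."""
    def pipeline(docs: List[dict]) -> List[dict]:
        for stage in stages:
            docs = stage(docs)
        return docs
    return pipeline


# Hypothetical stand-ins for the real modules.
def research(docs: List[dict]) -> List[dict]:
    # Toy "summary": the first 40 characters of the text.
    return [{**d, "summary": d["text"][:40]} for d in docs]


def categorize(docs: List[dict]) -> List[dict]:
    # Toy "importance": the length of the text.
    return [{**d, "importance": len(d["text"])} for d in docs]


def importance_filter(min_importance: int) -> Stage:
    def stage(docs: List[dict]) -> List[dict]:
        return [d for d in docs if d["importance"] >= min_importance]
    return stage


def recency_filter(max_age_days: int) -> Stage:
    def stage(docs: List[dict]) -> List[dict]:
        return [d for d in docs if d["age_days"] <= max_age_days]
    return stage


# Two configurations built from the same parts.
express_like = compose(research, categorize, importance_filter(20), recency_filter(7))
sales_like = compose(research, categorize)  # same modules, no time filter
```

The point of the sketch is that each configuration is just a different ordering and selection of the same small stages, so a new client situation means a new `compose(...)` call rather than new modules.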

Every situation boils down to a few basic cognitive tasks, like extracting information from text, identifying key relationships between entities, deduplicating near-similar facts, etc.
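As an illustration of one such task, here is a minimal sketch of deduplicating near-similar facts. It uses Jaccard similarity over word sets, which is a common textbook technique and only an assumption here, not a description of how Coseer does it. The function names and the 0.8 threshold are hypothetical.

```python
import re


def tokens(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a, b):
    """Jaccard similarity of two sets: |a & b| / |a | b|."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def dedupe(facts, threshold=0.8):
    """Keep a fact only if it is not too similar to any fact already kept."""
    kept = []
    kept_tokens = []
    for fact in facts:
        t = tokens(fact)
        if all(jaccard(t, k) < threshold for k in kept_tokens):
            kept.append(fact)
            kept_tokens.append(t)
    return kept


facts = [
    "Acme acquired Beta Corp in 2015.",
    "In 2015, Acme acquired Beta Corp.",
    "Acme opened a new office in Austin.",
]
unique_facts = dedupe(facts)
```

Here the second fact uses the same words as the first in a different order, so it is dropped, while the third is kept. The pairwise comparison is quadratic in the number of facts, so a sketch like this only suits small inputs.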

Underlying everything is a design philosophy of building highly scalable processes and storage architectures. This is where we are closest to a big data company. Not only do we have to deal with large amounts of information, but the latency demands on our system are also tough. Designing scalable software with parallel processing is the only choice we have.
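A very small sketch of the parallel-processing idea, using Python's standard `concurrent.futures` module, might look like the following. The `process` function and the document fields are hypothetical placeholders for real per-document work, and a thread pool is just one assumed choice; it suits I/O-bound steps such as ingestion, while CPU-heavy steps would more likely use processes or a distributed system.

```python
from concurrent.futures import ThreadPoolExecutor


def process(doc):
    # Hypothetical per-document work: count the words in the text.
    return {"id": doc["id"], "n_words": len(doc["text"].split())}


def process_all(docs, max_workers=4):
    """Process documents independently across a pool of worker threads."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Executor.map returns results in the same order as the inputs.
        return list(pool.map(process, docs))
```

Because each document is handled independently, adding workers (or machines) is what lets throughput grow with the volume of information.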

This approach of scalable individual services, tools and models lets us address a very diverse set of problems within enterprises and still stay true to our core mission - to automate and scale cognitive tasks in enterprise workflows. Do let us know if we can help you. And stay tuned as we continue our journey - follow us on social media.